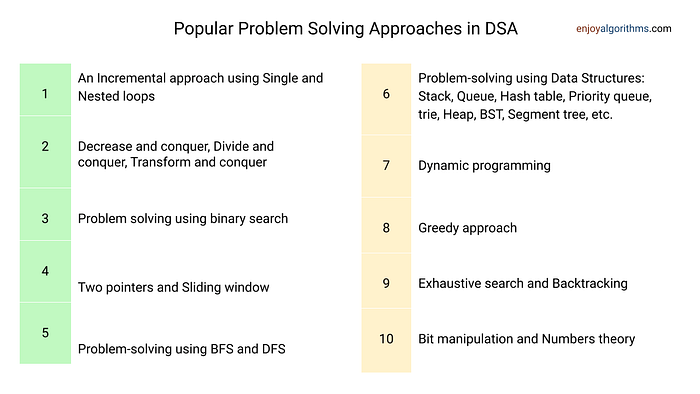

Top Problem-Solving Approaches in Data Structures and Algorithms

In this blog, we have discussed some popular strategies for solving problems in data structures and algorithms. Mastering and applying these strategies can help you more effectively tackle problems and crack the coding interview.

An Incremental approach using Single and Nested loops

One common approach to problem-solving is to build a solution incrementally using a loop. There are several variations of this approach:

- Input-centric strategy: In this approach, we process one input at a time and build the partial solution with each iteration of the loop.

- Output-centric strategy: With this approach, we add one output to the solution at a time and build the partial solution iteratively.

- Iterative improvement strategy: This involves starting with some easily available approximations to a solution and then continuously improving upon them until we reach the final solution.

There are also several approaches based on loops: Using a single loop and variables, Using nested loops and variables, Incrementing the loop by a constant (more than 1), Using the loop twice (double traversal), Using a single loop and a prefix array (or extra memory), etc.

Suggested coding problems to explore

- Roman to Integer

- Leaders in an array

- Valid mountain array

- FizzBuzz problem

- Insertion, bubble and selection sort

- Find max and min in an array

- Sort an array in a waveform

- Equilibrium index of an array

- Find product except self

- Rotate matrix by 90 degrees

- Spiral traversal of matrix

- Find row with max ones

Decrease and Conquer Approach

The decrease-and-conquer strategy involves solving a problem by finding the solution of a one subproblem. This typically leads to a recursive algorithm, which breaks the problem down into smaller input sizes and continues to do so until it reaches a base case.

Suggested coding problems to explore

- Euclid algorithm of finding GCD

- Binary search algorithm

- Josephus problem

- Searching in binary search tree

- Insertion in binary search tree

- Deletion in binary search tree

- Quick-select algorithm to find kth smallest

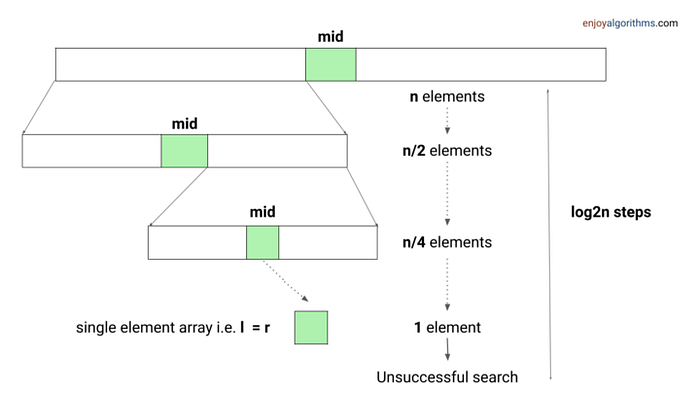

Problem-solving using Binary Search

The binary search idea can be useful for efficiently solving searching problems when the array has some order property, such as being sorted. With this approach, we can find a solution in O(logn) time complexity.

To use binary search, we will need to modify the standard binary search algorithm based on the specific conditions of the problem. The core idea is to calculate the mid-index and iterate over the left or right half of the array.

Suggested coding problems to explore

- Find the square root of an integer

- Search in rotated sorted array

- Fixed point in array

- Search a sorted 2D matrix

- Median of sorted arrays

- First and last position of element in sorted array

- Find maximum in increasing and decreasing array

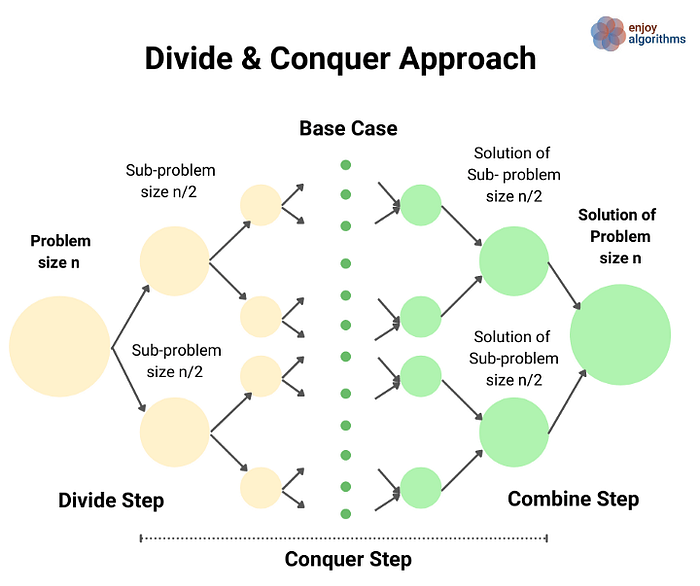

Divide and Conquer Approach

The divide-and-conquer strategy involves breaking a problem down into multiple subproblems, solving each of them, and then combining their solutions to get a solution to the original problem. This approach can be an effective way to solve many fundamental problems in DSA.

By dividing the problem into smaller pieces, it becomes easier to tackle each piece individually and then combine the solutions to get a final solution to the overall problem.

Suggested problems to explore

- Merge sort algorithm

- Quick sort algorithm

- Divide and conquer solution of maximum subarray sum

- Divide and conquer solution of finding max difference in array

- Divide and conquer solution of finding max and min in array

- Divide and conquer solution of finding majority element in array

- Divide and conquer idea of building segment tree

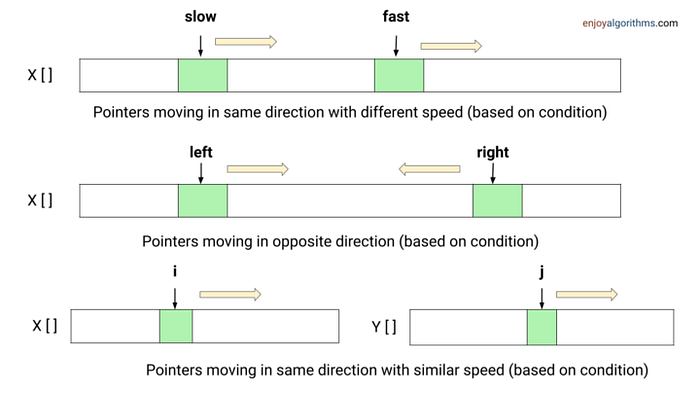

Two Pointers Approach

The two-pointer approach is a useful strategy for optimizing time and space complexity in problems involving searching on arrays and linked lists. It uses pairs of indices or pointers to simultaneously iterate over two different input parts, which allows us to perform fewer operations.

There are three variations of this approach:

Pointers are moving in the same direction with the same pace

- Merging two sorted arrays

- Find intersection of two arrays

- Find intersection of two sorted inked lists

- Checking an array is a subset of another array

Pointers are moving in the same direction at a different pace (Fast and slow pointers)

- Partition process in the quick sort

- Remove duplicates from the sorted array

- Find the middle node in a linked list

- Detect loop in a linked list

- Dutch national flag problem

- Move all zeroes to the end

- Remove nth node from list end

Pointers are moving in the opposite direction

- Check pair sum in an array

- Finding triplet with zero-sum

- Rainwater trapping problem

- Container with most water

Sliding Window Approach

The sliding window concept is a common approach used to solve problems involving arrays or strings. It involves defining a window as a contiguous sequence of elements, defined by start and end indices. We can perform operations on elements within the window and then “slide” it in a forward direction by incrementing the left or right end.

This approach can be effective when the problem involves tasks that must be performed on a contiguous block of a fixed or variable size. It can also be useful for improving time complexity by converting a nested loop solution into a single loop solution.

Suggested problems to explore

- Longest substring without repeating characters

- Count distinct elements in every window

- Max continuous series of 1s

- Find max consecutive 1’s in an array

Transform and Conquer Approach

The transform-and-conquer approach involves solving a problem by first transforming it into another problem that is easier to solve. This approach is broken down into two stages: the transformation stage and the conquering stage.

In the transformation stage, we take the original problem and modify it in some way that makes it easier to solve. In the conquering stage, we then solve the transformed problem.

Example problems: Pre-sorting based algorithms (Finding the closest pair of points, checking whether all the elements in a given array are distinct, etc.)

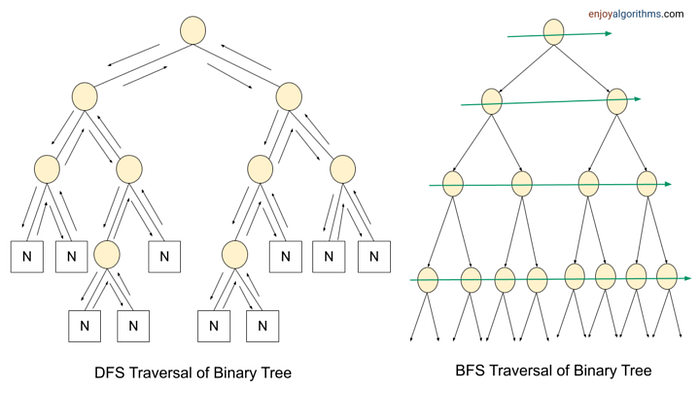

Problem Solving using BFS and DFS Traversal

Many tree and graph problems can be solved using either depth-first search (DFS) or breadth-first search (BFS) traversal. If we need to search for something closer to the root or source node, we can use BFS. On the other hand, if we need to search more deeply, we can use DFS.

In some cases, we can use either BFS or DFS regardless of the order of the nodes. However, in other cases, the choice of traversal method can make a significant difference in terms of efficiency. It’s important to consider the specific use case when deciding which traversal method to use.

In binary tree problems, there are four different types of traversal:

- Preorder traversal: This type of traversal visits the root node first, followed by the left subtree and then the right subtree. It’s useful when we need to explore all the tree nodes before looking at any leaves.

- Inorder traversal: This type of traversal visits the left subtree first, followed by the root node and then the right subtree. It’s useful when we are working with a binary search tree (BST) and we want to generate the node’s data in increasing order.

- Postorder traversal: This type of traversal visits the left subtree first, followed by the right subtree and then the root node. It’s useful when we need to explore all the leaf nodes before looking at any internal nodes.

- Breadth-first search (BFS) traversal: This type of traversal uses a queue to explore the nodes level by level. It can be useful when we need to find specific information about a particular level of the tree.

To solve tree and problems, sometimes we use these strategies with traversal:

- We use additional variables or pointers as function parameters when we need to store some information between recursive calls. This is useful when we want to pass information back up the recursive call stack.

- Helper functions are useful when we want to break a complex problem into smaller, more manageable parts. These helper functions can perform specific tasks that are needed to solve the overall problem.

- Parent pointers can be useful when we need to keep track of the relationship between a node and its parent in a tree. This can be helpful when we need to traverse up the tree to find certain information.

- Storing additional data inside the nodes can be useful when we want to keep track of certain information while traversing a tree or graph. For example, we may want to store whether a node has been visited during a search, or we may want to store the minimum distance from a particular node to the root.

- Data structures like stacks, queues, and priority queues can be useful when we need to store and access data in a specific order. For example, we can use a stack to store nodes that we have visited during a depth-first search, or we can use a queue to store nodes that we need to visit during a breadth-first search.

Suggested problems to explore

- Find min depth of a binary tree

- Merge two binary trees

- Find the height of a binary tree

- Validate Binary Search Tree

- Convert sorted array to balanced BST

- Find absolute minimum difference in a BST

- The kth largest element in a BST

- Course scheduling problem, bipartite graph

- Find the left view of a binary tree

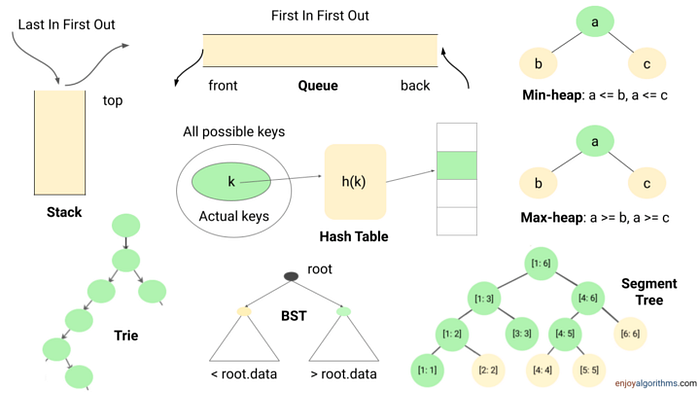

Problem-solving using Data Structures

The data structure is one of the powerful tools of problem-solving in algorithms. It helps us perform some of the critical operations efficiently and improves the time complexity of the solution. Here are some of the key insights:

- Many coding problems require an efficient way to perform the search, insert and delete operations. We can perform all these operations using the hash table in O(1) time average. It’s a kind of time-memory tradeoff, where we use extra space to store elements in the hash table to improve performance.

- Other times, we may need to store data in a stack (last in, first out) or queue (first in, first out) to solve certain problems.

- If we need to continuously insert or remove the maximum or minimum element (or an element with the highest or lowest priority), we can use a heap (or priority queue) to do so efficiently.

- Other data structures that can be useful in specific cases include Trie, AVL Tree, and Segment Tree, which can help us perform certain operations efficiently.

Suggested problems to explore

- Next greater element

- Valid parentheses

- Largest rectangle in a histogram

- Valid anagram

- Largest subarray with 0 sum

- Sliding window maximum

- kth smallest element in an array

- Most frequent element

- Top k frequent elements

- Longest common prefix

- Range sum query

- Sort characters by frequency

- Longest consecutive sequence

- Check equal array

Data structure design questions

- Implement queue using stacks

- Implement stack using queues

- Least frequently used (LFU) cache

- Least recently used (LRU) cache

- Design bloom filter

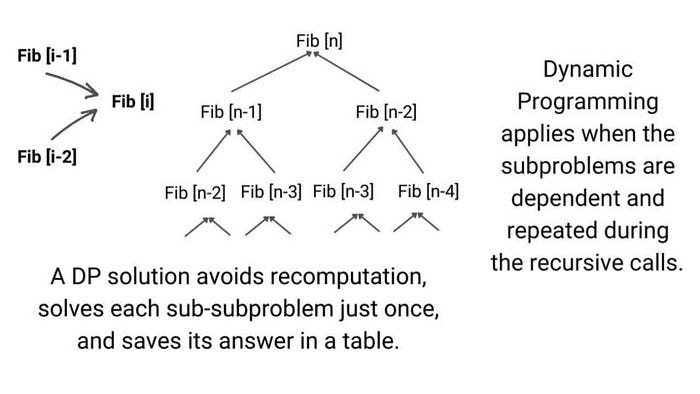

Problem Solving using Dynamic Programming

Dynamic programming is a technique for solving problems that have overlapping or repeating subproblems. Instead of solving these subproblems repeatedly, we solve each smaller subproblem just once and store the result in memory.

This can be a very effective way to solve optimization and counting problems. By storing the results of smaller subproblems and reusing them, we can avoid a lot of unnecessary computation and improve the efficiency of our solution.

Suggested problems to explore

- Climbing stairs problem

- Maximum subarray sum

- Longest common subsequence

- Minimum number of jumps to reach end

- Minimum coin change

- Edit distance problem

- Count unique binary search trees

Problem Solving using Greedy Approach

The greedy algorithm is a method for solving optimization problems by constructing a solution incrementally, making the locally optimal choice at each step. The hope is that a sequence of these locally optimal choices will lead to a globally optimal solution for the whole problem.

In this approach, we take the best available option at each step and add it to our partially constructed solution, without violating the constraints of the problem. This can be an effective method in some cases, but it’s important to note that it doesn’t always work. While it’s generally not too difficult to design a greedy algorithm, it can be more challenging to prove that it produces an optimal solution.

Suggested problems to explore

- Fractional knapsack problem

- Dijkstra algorithm of finding shortest path in graph

- Activity selection problem

- Huffman coding algorithm of data compression

- Prims algorithm of finding minimum spanning tree in graph

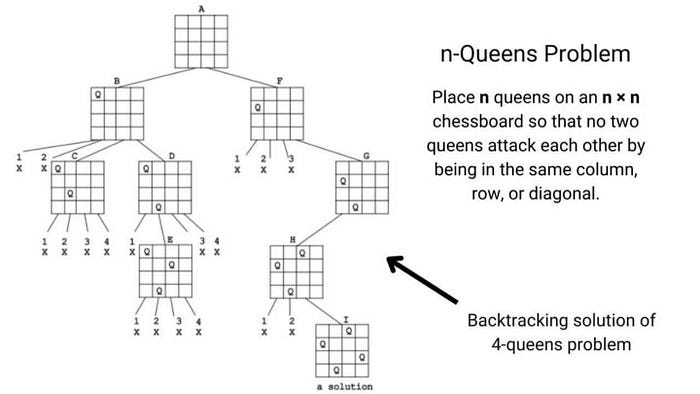

Problem Solving using Backtracking

Backtracking is a method for generating a solution by avoiding unnecessary possibilities. The main idea behind backtracking is to construct a solution one piece at a time and evaluate each partial solution as follows:

- If the partial solution can be developed further without violating the constraints of the problem, we do so by taking the first valid option available at the next stage.

- If there are no valid options at the next stage, meaning that the constraints of the problem would be violated, we backtrack to the previous stage and try the next option for that stage instead.

Suggested problems to explore

Problem-solving using Bit manipulation and Numbers theory

Some coding problems are inherently mathematical in nature, but sometimes we need to identify and exploit the mathematical properties of a problem in order to solve it efficiently. In these cases, techniques from number theory and bit manipulation can be very helpful.

Bit manipulation involves understanding the binary representation of the input data and processing it at the level of individual bits. This can lead to more efficient solutions, as computers can perform bit-wise operations very quickly. In some cases, bit manipulation can even eliminate the need for extra loops and improve performance by a significant margin.

Suggested problems to explore

Hope you enjoyed the blog. Later we will write a separate blog on each problem-solving approach. Enjoy learning, Enjoy algorithms!

For more content, explore our free DSA course and coding interview blogs. If you want to learn live, explore these two courses:

If you have any queries/doubts/feedback, please write us at contact@enjoyalgorithms.com.

Originally published at https://www.enjoyalgorithms.com/blog/problem-solving-approaches-in-data-structures-and-algorithms/